Tool Calling Is Not a Solved Problem

Tool calling refers to LLMs' ability to invoke functions by generating structured output. This allows LLMs to extend their abilities beyond text generation, enabling them to perform actions such as retrieving information, executing code, or integrating with third-party services in response to user queries. It is hard to overstate the significance of tool calling for LLMs. Modern AI applications rely on tool calling to interact with the environment. Tool calling is the underlying mechanism for LLMs to call APIs and MCP servers, which in turn give them access to the internet, a coding environment, and more. Improving tool calling ability has become a central component of LLM post-training. One of the major obstacles to the initial adoption of DeepSeek R1 was its lack of tool calling ability.

In this blog post, I will first give an overview of the prompt templates that enable function calling. Then I will present established patterns of function calling. I will also go over two agent design patterns that offer different takes on function calling, ReAct and CodeAct. Finally, I will describe lessons I learned from implementing these patterns.

Add function-calling to LLMs

To enable LLMs to call tools, they need to generate structured output that can be parsed as function calls. At the end of the day, LLMs generate text, which is inherently unstructured. For LLMs to call tools, they need to talk in a language that the tools can understand. In software engineering, a tool or program exposes a set of APIs to the outside environment. Therefore, LLMs need to understand and generate structured output that can serve as API calls. Because pre-trained models generate unstructured text, this means first training LLMs to generate structured content. In other words, tool calling can be seen as a byproduct of structured output.

Enabling structured output is a two-step process. First, during post-training, fine-tune the model with a dataset of function calls. Through this process, LLMs learn to generate function calls following the patterns in the training data. These datasets contain examples of question-answering tasks where LLMs use tools to resolve user queries. A sample sequence from Nous Research's hermes-function-calling-v1 dataset is provided below, where each entry is a trace of LLM calls generated by a more mature model.

<bos><start_of_turn>human

You are a function calling AI model. You are provided with function signatures within <tools></tools> XML tags. You may call one or more functions to assist with the user query. Don't make assumptions about what values to plug into functions. Here are the available tools:

<tools> [
  {
    'type': 'function',
    'function': {
      'name': 'get_news_headlines',
      'description': 'Get the latest news headlines',
      'parameters': {
        'type': 'object',
        'properties': {
          'country': {
            'type': 'string',
            'description': 'The country for which headlines are needed'
          }
        },
        'required': ['country']
      }
    }
  }
]
</tools>

Use the following pydantic model json schema for each tool call you will make: {'title': 'FunctionCall', 'type': 'object', 'properties': {'arguments': {'title': 'Arguments', 'type': 'object'}, 'name': {'title': 'Name', 'type': 'string'}}, 'required': ['arguments', 'name']} For each function call return a json object with function name and arguments within <tool_call></tool_call> XML tags as follows:

<tool_call>

{tool_call}

</tool_call>

Also, before making a call to a function take the time to plan the function to take. Make that thinking process between <think>{your thoughts}</think>

Can you get me the latest news headlines for the United States?<end_of_turn><eos>

<start_of_turn>model

<think>Okay, so the user asked for the latest news headlines in the United States. I need to figure out how to respond appropriately using the tools available.

Looking at the tools provided, there's a function called get_news_headlines. That seems perfect for this situation. It requires a country code, which the user specified as the United States.

Since the user is asking specifically about the US, I'll use the country parameter as 'United States' in the function call. This should fetch the relevant news for them.

I should make sure to format the response correctly as a JSON object with the function name and arguments.

</think><tool_call>

{'name': 'get_news_headlines', 'arguments': {'country': 'United States'}}

</tool_call><end_of_turn><eos>

<start_of_turn>tool

<tool_response>

{'headlines': ['US President signs new bill into law', 'Major earthquake hits California', 'NASA announces new Mars mission', 'Tech giant releases new smartphone', 'US economy shows signs of recovery']}

</tool_response><end_of_turn><eos>

<start_of_turn>model

Here are the latest news headlines for the United States:

1. US President signs new bill into law

2. Major earthquake hits California

3. NASA announces new Mars mission

4. Tech giant releases new smartphone

5. US economy shows signs of recovery<end_of_turn><eos>

Note that this is a multi-turn conversation between a user and a model. The user's first turn carries the instructions on how to call tools, the definition of a tool called get_news_headlines, and the question itself. The LLM answers the question by first calling the tool in the specified format; the user then feeds the tool's response back to the LLM inside <tool_response> tags so that it can generate the final answer. It's important to point out that in practice, tool responses are the results of code executed on the user's side. It would make no sense for the LLM to make up the tool responses, which would be no different from hallucination. Through fine-tuning, LLMs learn from examples like this to call functions in the desired format.
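The user-side half of this exchange can be sketched in a few lines. The following is a minimal illustration, not the dataset's or any library's reference implementation: it pulls the payload out of the <tool_call> tags, runs the matching function, and wraps the result in <tool_response> tags. The body of `get_news_headlines` and the `TOOLS` registry are hypothetical stand-ins. Because the trace uses Python-style single quotes, the payload is parsed with `ast.literal_eval` rather than a JSON parser.

```python
import ast
import re

# Matches the payload between <tool_call> tags, as in the trace above.
TOOL_CALL_RE = re.compile(r"<tool_call>\s*(.*?)\s*</tool_call>", re.DOTALL)


def get_news_headlines(country: str) -> dict:
    # Hypothetical stand-in for a real news API.
    return {"headlines": [f"Sample headline for {country}"]}


# Hypothetical registry mapping tool names to Python callables.
TOOLS = {"get_news_headlines": get_news_headlines}


def extract_tool_calls(model_output: str) -> list[dict]:
    """Return every tool call found in the model's text output."""
    # The trace uses single-quoted dict literals, which json.loads rejects,
    # so the payload is parsed as a Python literal instead.
    return [ast.literal_eval(payload) for payload in TOOL_CALL_RE.findall(model_output)]


def run_tool_call(call: dict) -> str:
    """Execute one call on the user's side and format the result for the model."""
    result = TOOLS[call["name"]](**call["arguments"])
    return f"<tool_response>\n{result}\n</tool_response>"


model_output = (
    "<think>The user wants US headlines.</think><tool_call>\n"
    "{'name': 'get_news_headlines', 'arguments': {'country': 'United States'}}\n"
    "</tool_call>"
)
calls = extract_tool_calls(model_output)
tool_message = run_tool_call(calls[0])
print(tool_message)
```

The string in `tool_message` is what gets appended to the conversation as the tool turn before the model is called again.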

However, since text generation is inherently nondeterministic, training alone cannot guarantee structured output. The second step is therefore to enforce the output structure at or after inference time, with methods such as constrained decoding and retries. For an overview of structured output, see Chapter 2 of Chip Huyen's AI Engineering book and a survey paper by Liang et al.1
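Of the two methods, retries are the easier one to sketch. The example below is an illustration under stated assumptions rather than a specific library's API: `generate` is any function that takes a prompt string and returns the model's text, and `fake_generate` is a scripted stand-in so the example runs without a model. The validation mirrors the FunctionCall schema from the trace above (a string `name` and an object `arguments`), but assumes the model emits strict JSON.

```python
import json


def validate_call(text: str) -> dict:
    """Parse text as a FunctionCall object; raise ValueError if it is malformed."""
    try:
        call = json.loads(text)
    except json.JSONDecodeError as err:
        raise ValueError(f"not valid JSON: {err}") from err
    if not isinstance(call, dict):
        raise ValueError("expected a JSON object")
    if not isinstance(call.get("name"), str):
        raise ValueError("missing string field 'name'")
    if not isinstance(call.get("arguments"), dict):
        raise ValueError("missing object field 'arguments'")
    return call


def call_with_retries(generate, prompt: str, max_attempts: int = 3) -> dict:
    """Ask the model again, with the validation error as feedback, until it parses."""
    feedback = ""
    for _ in range(max_attempts):
        text = generate(prompt + feedback)
        try:
            return validate_call(text)
        except ValueError as err:
            feedback = f"\nYour previous output was rejected ({err}). Return only a JSON object."
    raise RuntimeError(f"no valid tool call after {max_attempts} attempts")


# Scripted stand-in for a model: the first reply is malformed, the second is valid.
_replies = iter([
    "Sure! get_news_headlines(US)",
    '{"name": "get_news_headlines", "arguments": {"country": "United States"}}',
])


def fake_generate(prompt: str) -> str:
    return next(_replies)


result = call_with_retries(fake_generate, "Get the latest US headlines.")
print(result)
```

Retries trade extra latency and cost for simplicity. Constrained decoding avoids the extra calls but requires control over the sampling process.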

When application developers use function calling, all they need to do is supply tool definitions in the format of a model's training data. This is achieved through chat templates, which structure users' messages, including system prompts, user queries, and function definitions. Based on its prior learning, the model will hopefully pattern match and use these tools to solve problems.
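As a rough picture of what a chat template does, the sketch below renders tool definitions and messages into a single prompt string that imitates the turn markers from the sample trace. It is a hand-written imitation for illustration only: real chat templates ship with each model, and the `render_prompt` function, the shortened `SYSTEM_PREFIX` text, and the exact whitespace are assumptions.

```python
import json

# Shortened, paraphrased version of the instructions in the trace (an assumption).
SYSTEM_PREFIX = (
    "You are a function calling AI model. You are provided with function "
    "signatures within <tools></tools> XML tags."
)


def render_prompt(tools: list[dict], messages: list[dict]) -> str:
    """Render tool definitions and chat messages into one prompt string."""
    tool_block = "<tools>\n" + json.dumps(tools, indent=2) + "\n</tools>"
    parts = ["<bos>"]
    for index, message in enumerate(messages):
        content = message["content"]
        if index == 0:
            # Instructions and tool definitions are prepended to the first turn.
            content = f"{SYSTEM_PREFIX}\n{tool_block}\n\n{content}"
        parts.append(f"<start_of_turn>{message['role']}\n{content}<end_of_turn><eos>\n")
    # Leave the prompt open at a model turn so generation continues from here.
    parts.append("<start_of_turn>model\n")
    return "".join(parts)


tools = [{
    "type": "function",
    "function": {
        "name": "get_news_headlines",
        "description": "Get the latest news headlines",
        "parameters": {
            "type": "object",
            "properties": {"country": {"type": "string"}},
            "required": ["country"],
        },
    },
}]
messages = [{
    "role": "human",
    "content": "Can you get me the latest news headlines for the United States?",
}]
prompt = render_prompt(tools, messages)
print(prompt)
```

The developer only supplies `tools` and `messages`; everything else in the prompt comes from the template.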

Tool calling patterns for agents

Implementing tool calling is closely linked to designing agents. Tool calling enables LLMs to operate in an expanded environment such as a browser or a coding environment. At present, the most popular agent applications are variations of a chat application, where a user talks to an agent system and assigns it tasks. The interfaces range from a conventional chat application (ChatGPT) to a chat interface embedded in an IDE (Cursor and Windsurf) to an agentic command-line tool (Claude Code, Codex, and Gemini CLI). The interactions in these applications are called *multi-turn* conversations: interactive conversations in which a user and an agent talk back and forth. At each turn, the agent might take several steps, with multiple tool calls, to address a user query.2 With a task like implementing a feature, an agent first calls an edit tool to write to files; then it executes the files to check for errors. It continues this loop until the errors are fixed. In this multi-step process, the human does not provide additional instructions to the agent.

For a long-running task like this, the agent sometimes fails and gets stuck in a loop of fixing errors and introducing new ones. There are several reasons for this. One is that the task exceeds the model's underlying problem-solving ability, in which case designing better systems around the agent cannot help. Another is that the agent loses track of the task as it runs for a long time. To address problems like this and make agents solve harder problems, multiple solutions have been proposed. In the rest of the article, I will go over ReAct and CodeAct, two agent design paradigms that use novel combinations of reasoning and tool calling to achieve better agent performance.3

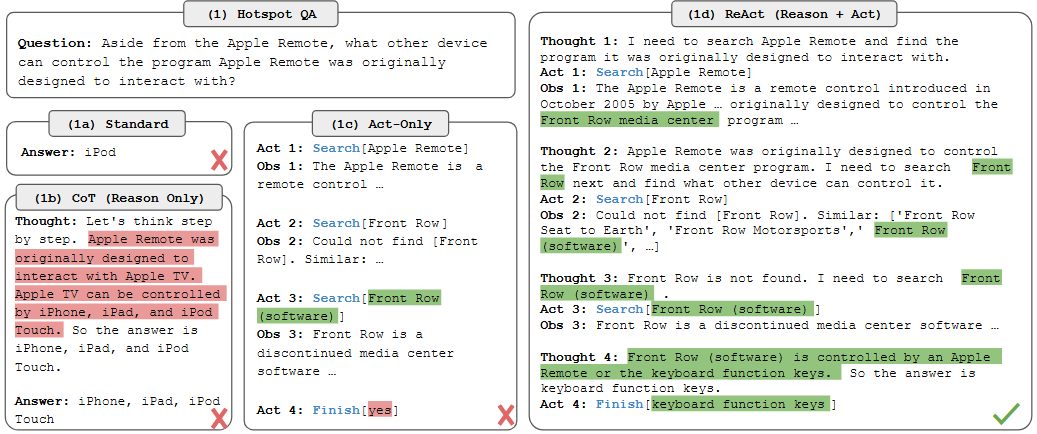

A cornerstone of agent design, ReAct introduced a pattern in which interweaving reasoning and tool calling steps can significantly improve an LLM's ability to solve complex tasks.4 ReAct is shorthand for reason and act (tool calling). The authors contrast ReAct with two previous design patterns, reasoning-only and act-only, as shown in the figure above.

For a task that requires a multi-step solution, Chain of Thought (CoT) provides intermediate reasoning steps before arriving at the final solution; alternatively, the Act-only paradigm leverages an LLM's tool calling ability to solve problems. In the example above, CoT gives a wrong answer based on reasoning alone, while Act-only stops midway through the task. ReAct combines reasoning and tool calls at each step and arrives at the correct answer. ReAct has become the mainstream paradigm for designing agents. The Nous Research sample trace above adopts this pattern. If you look at how ChatGPT or Cursor accomplishes a task, they broadly follow this paradigm. While the user receives a coherent response from the LLM with reasoning and tool calls, behind the scenes the LLM has been called multiple times to incorporate the responses of tool calls such as web search and code execution.
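The "called multiple times behind the scenes" part is essentially a loop. Here is a minimal sketch of it, assuming the <tool_call> format from the Nous Research trace; `react_loop`, `scripted_model`, and the tool body are hypothetical names, and the scripted model stands in for a real LLM call so the example runs on its own. The step budget guards against the error-fixing loops described earlier.

```python
import ast
import re

TOOL_CALL_RE = re.compile(r"<tool_call>\s*(.*?)\s*</tool_call>", re.DOTALL)


def get_news_headlines(country: str) -> dict:
    # Hypothetical stand-in for a real news API.
    return {"headlines": ["NASA announces new Mars mission"]}


def react_loop(generate, tools: dict, question: str, max_steps: int = 5):
    """Alternate model calls and tool executions until the model stops calling tools."""
    transcript = [("human", question)]
    for _ in range(max_steps):
        reply = generate(transcript)
        transcript.append(("model", reply))
        match = TOOL_CALL_RE.search(reply)
        if match is None:
            # No tool call in the reply: treat it as the final answer.
            return reply, transcript
        call = ast.literal_eval(match.group(1))
        observation = tools[call["name"]](**call["arguments"])
        transcript.append(("tool", f"<tool_response>{observation}</tool_response>"))
    raise RuntimeError("step budget exhausted without a final answer")


def scripted_model(transcript):
    # Stand-in for an LLM: reason and call the tool first, then answer.
    role, content = transcript[-1]
    if role == "tool":
        return "Here are the latest headlines: " + content
    return (
        "<think>I need the news tool for this.</think><tool_call>"
        "{'name': 'get_news_headlines', 'arguments': {'country': 'United States'}}"
        "</tool_call>"
    )


answer, transcript = react_loop(
    scripted_model,
    {"get_news_headlines": get_news_headlines},
    "Can you get me the latest news headlines for the United States?",
)
print(answer)
```

The user sees only the final answer, while the transcript records the intermediate model and tool turns.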

As the name suggests, CodeAct is in direct conversation with ReAct: its authors propose calling tools with executable Python code.5 As the trace from Nous Research suggests, most ReAct implementations use a JSON schema to call tools:

<tool_call>

{'name': 'get_news_headlines', 'arguments': {'country': 'United States'}}

</tool_call>

CodeAct posits that tool calling with a JSON schema allows only one tool call per action, which is inefficient. Instead, the authors prompt LLMs to generate Python code that incorporates the tool calls. The versatility of code introduces control flow, which allows a function to be called multiple times in the same action. Executable code also significantly augments what a single action can accomplish by bringing in other features of a programming language, such as combining multiple tools in the same action and managing intermediate results. As an illuminating example in the paper shows, a task that takes ReAct multiple steps can be accomplished in a single step with CodeAct.

In many use cases where agents are expected to perform one action at a time, like browsing the web (open a page -> perform a click -> look up a term), CodeAct behaves similarly to ReAct. But for cases where tool calls can be optimized with control flow, CodeAct is a more efficient alternative to ReAct.
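To make the contrast concrete, here is a minimal sketch of a single code action. It is not the paper's implementation: `run_code_action`, the sample data, and the `code_action` string (which stands in for code a model would generate) are all hypothetical. The action loops over two countries and calls the tool twice, which would take two separate JSON tool calls. Note that plain `exec` is not a sandbox; a real agent should run generated code in an isolated environment.

```python
import contextlib
import io


def get_news_headlines(country: str) -> list[str]:
    # Hypothetical stand-in for a real news API, with made-up sample data.
    sample = {
        "United States": ["NASA announces new Mars mission", "Tech giant releases new smartphone"],
        "France": ["Paris hosts climate summit"],
    }
    return sample.get(country, [])


def run_code_action(code: str, tools: dict) -> str:
    """Execute model-written code with the tools in scope; return what it printed."""
    namespace = dict(tools)
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(code, namespace)  # illustration only: exec provides no isolation
    return buffer.getvalue()


# Stand-in for code the model would generate as one action.
code_action = """
for country in ["United States", "France"]:
    headlines = get_news_headlines(country)
    print(country, len(headlines))
"""

observation = run_code_action(code_action, {"get_news_headlines": get_news_headlines})
print(observation)
```

The captured standard output plays the role of the tool response: it is what gets fed back to the model as the observation for the next step.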

Patterns in practice

In the past few weeks, I have been experimenting with the ReAct and CodeAct agent patterns, and both have limitations in practice. For ReAct, because tool calling behavior varies across LLM providers, it is hard to guarantee consistent ReAct behavior across models. For CodeAct, while the idea of writing code to replace JSON schemas sounds appealing, the reality is that many CodeAct agents are built on top of the existing infrastructure of JSON tool calls.

There are many ways to implement the ReAct pattern. The original implementation by the authors of the paper relies on prompting alone. The authors give few-shot examples of the ReAct pattern that label each thinking, action, and observation component and instruct the LLM to follow them. Unlike the current paradigm of tool calling, the prompt does not include a list of the actions or tools the agent can use; instead, it includes many complete traces that showcase the available actions. The output is then processed by detecting keywords like "Action" and "Observation". The advantage of this method is that models follow the pattern purely through text output, without relying on function calling, whose reliability and behavior vary across models. For some tasks, the thinking step is implemented as an action called "thinking", which is not so different from the "thinking tool" popularized by Claude. It is worth noting that the original ReAct implementation does not use a JSON format to call tools, since function calling with JSON was not standardized when the paper came out. But the actions were called one at a time, which is no different from using a JSON schema. Although native tool calls are now the prevailing way to implement function calling, tool calling through prompting remains a valid option. While parsing the output can be laborious, the advantage of prompting is its versatility. With few-shot prompting, you can ask the model to produce any combination of tool calls and reasoning steps, which is difficult to achieve with native tool calls.
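Keyword detection of this kind can be sketched with a regular expression. The sketch assumes output lines shaped like `Action 1: Search[Colorado orogeny]`, in the style of the question-answering traces in the ReAct paper; the exact line format, the `parse_action` helper, and the sample output are assumptions for illustration, not the authors' code.

```python
import re

# Matches lines such as "Action 1: Search[Colorado orogeny]" (assumed format).
ACTION_RE = re.compile(r"^Action(?: \d+)?:\s*(\w+)\[(.*)\]\s*$", re.MULTILINE)


def parse_action(model_output: str):
    """Return (action_name, argument) for the first action line, or None."""
    # Drop anything after an "Observation" keyword: observations must come
    # from actually running the action, not from the model's own text.
    text = model_output.split("\nObservation")[0]
    match = ACTION_RE.search(text)
    if match is None:
        return None
    return match.group(1), match.group(2)


output = (
    "Thought 1: I need to search Colorado orogeny.\n"
    "Action 1: Search[Colorado orogeny]\n"
    "Observation 1: (text the model made up)"
)
action = parse_action(output)
print(action)
```

In a full loop, the parsed action would be executed, and its real result appended to the prompt as the next "Observation" line.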

A more sensible way to implement ReAct nowadays is through built-in function calling. Function calling through a built-in mechanism only requires a description of the tools.6 Compared with prompting, the implementation is a lot simpler. Because function calling is supported natively, there is no need to provide examples to guide the model's behavior. The problem, however, is instructing the model to output thoughts and call functions at the same time. Although models are now quite capable of calling tools, many are not trained to produce reasoning and call tools in the same response (this includes Llama 3.3, and sometimes gpt-4o as well). Prompting the model to generate reasoning steps while calling tools won't work in these scenarios. Since it is unclear how a given model is trained to behave when calling tools, this implementation won't work consistently across models. Another method I tried was using few-shot examples and stop words to force LLMs to generate thoughts and call actions in two consecutive requests. This is expensive and extremely unstable.7 Both attempts are illustrated in the figure below.

Implementing CodeAct raises the same design questions as ReAct. Following the CodeAct agent from Hugging Face's smolagents, I used structured output to force models to generate reasoning and code at each step.8 But CodeAct can also be implemented with native function calling, using a Python interpreter tool that executes code in a sandbox. A set of functions can be described to the LLM through prompting to inform it of the APIs it can use in the code, serving as the equivalent of tools in ReAct. In the latter method, traditional JSON-style tool calling is still used, but the Python interpreter tool serves as the agent's environment, while the tools the LLM uses to address specific problems are passed through prompts alone rather than as native tool calls.

In conclusion, tool calling is a trained behavior of LLMs, closely linked to structured output. The LLM only generates the JSON or code that calls the tool; it is up to the user to execute it. The agent design paradigms ReAct and CodeAct offer two distinct visions of integrating tool calling into agents, with JSON and Python code respectively. In practice, both patterns can be implemented in a variety of ways. Developers will have to weigh the pros and cons of different patterns and implementations as they try to build agents that are both powerful and reliable.

Liang, Xun, Hanyu Wang, Yezhaohui Wang, Shichao Song, Jiawei Yang, Simin Niu, Jie Hu et al. "Controllable text generation for large language models: A survey." arXiv preprint arXiv:2408.12599 (2024).

Mao et al. “BFCL V3, Multi-Turn & Multi-Step Function Calling Evaluation”, https://gorilla.cs.berkeley.edu/blogs/13_bfcl_v3_multi_turn.html

In academic literature, what I refer to as "agent design patterns" are often called prompting techniques. The latter term is in line with Chain of Thought prompting and other prompting techniques. As a research project, ReAct is in direct conversation with CoT. Here, I categorize ReAct and CodeAct as agent design patterns to emphasize their engineering aspects. ReAct and CodeAct represent two paradigms of designing agents that involve distinct takes on tool calling. Other aspects of agent design include memory management and multi-agent patterns. In recent discussions, the term "context engineering" refers to a similar set of problems.

Yao, Shunyu, Jeffrey Zhao, Dian Yu, Nan Du, Izhak Shafran, Karthik Narasimhan, and Yuan Cao. "React: Synergizing reasoning and acting in language models." In International Conference on Learning Representations (ICLR). 2023.

Wang, Xingyao, Yangyi Chen, Lifan Yuan, Yizhe Zhang, Yunzhu Li, Hao Peng, and Heng Ji. "Executable code actions elicit better llm agents." In Forty-first International Conference on Machine Learning. 2024.

To be precise, native tool calling also passes tool definitions in the prompt, via the underlying chat templates, but the user no longer needs to compose a system prompt explicitly. The terminology of prompting versus tool calling is taken from the Berkeley Function-Calling Leaderboard: https://gorilla.cs.berkeley.edu/blogs/8_berkeley_function_calling_leaderboard.html.

One thing I learned from this exercise is that when calling LLMs in a loop, you should always expect them to behave in the same way (the same type of output with different content) on each call; it is impossible to instruct them to behave differently from one call to the next (tool calls on one and reasoning on another). If you need different types of output, you should use a design pattern other than a loop.

See HF’s blog: https://huggingface.co/blog/structured-codeagent